AI models are possibility space explorers

Z3 is a text editor for fiction built around a single insight: LLMs are possibility space explorers. The point of writing fiction with LLMs isn't to take the first output, or the tenth. The point is to explore the space of possible stories — all the directions a narrative could take — so that the human can decide which is most interesting. Imagination tends to be the limiting factor in creative work, and LLMs have imagination in abundance.

I built Z3 as a solo project, starting from my own frustrations with existing AI tools. The interfaces were poorly suited to creative writing, treating LLMs as text generators rather than collaborative partners for exploring ideas.

The problem

I interviewed hobbyist writers and found two recurring problems. First, writer's block, which is really an imagination bottleneck. You're stuck at a point in the narrative, unable to see a path forward. If you haven't experienced it, it's a bit as if English had become an arcane language and you had become a monkey. You remember fondly a time when words flowed from your fingertips like Jägermeister in Vegas, but your mind is now just banana.

Second, a lack of feedback. Most hobbyists work alone, without the editor or friend who reflects ideas back and helps refine early drafts. LLMs are a natural fit for both of these problems, and they became the foundation of Z3.

Inline completions with guidance

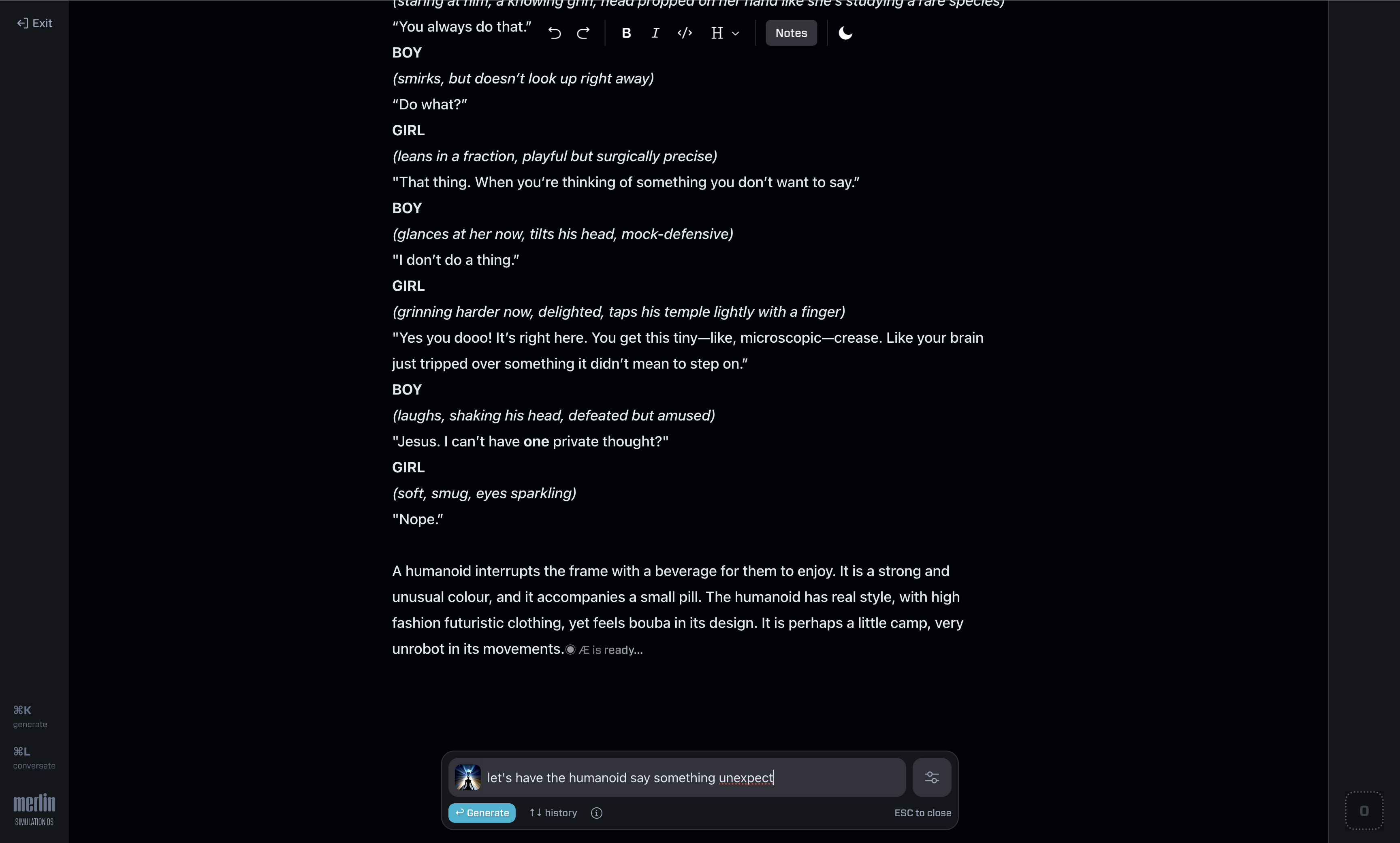

The first interaction I designed was guided completions. Asking an LLM to write the next 50–100 words is intuitive, but unguided output can veer wildly off course. Even in the middle of an Elizabethan love story, Claude might introduce a quantum anomaly that opens up a tear in the fabric of spacetime. Fun once, tiring by the third time.

To solve this, completions are activated via a Cmd+K menu where users provide guidance: plot ideas, character notes, thematic direction, or anything else that aligns the model with their thinking.

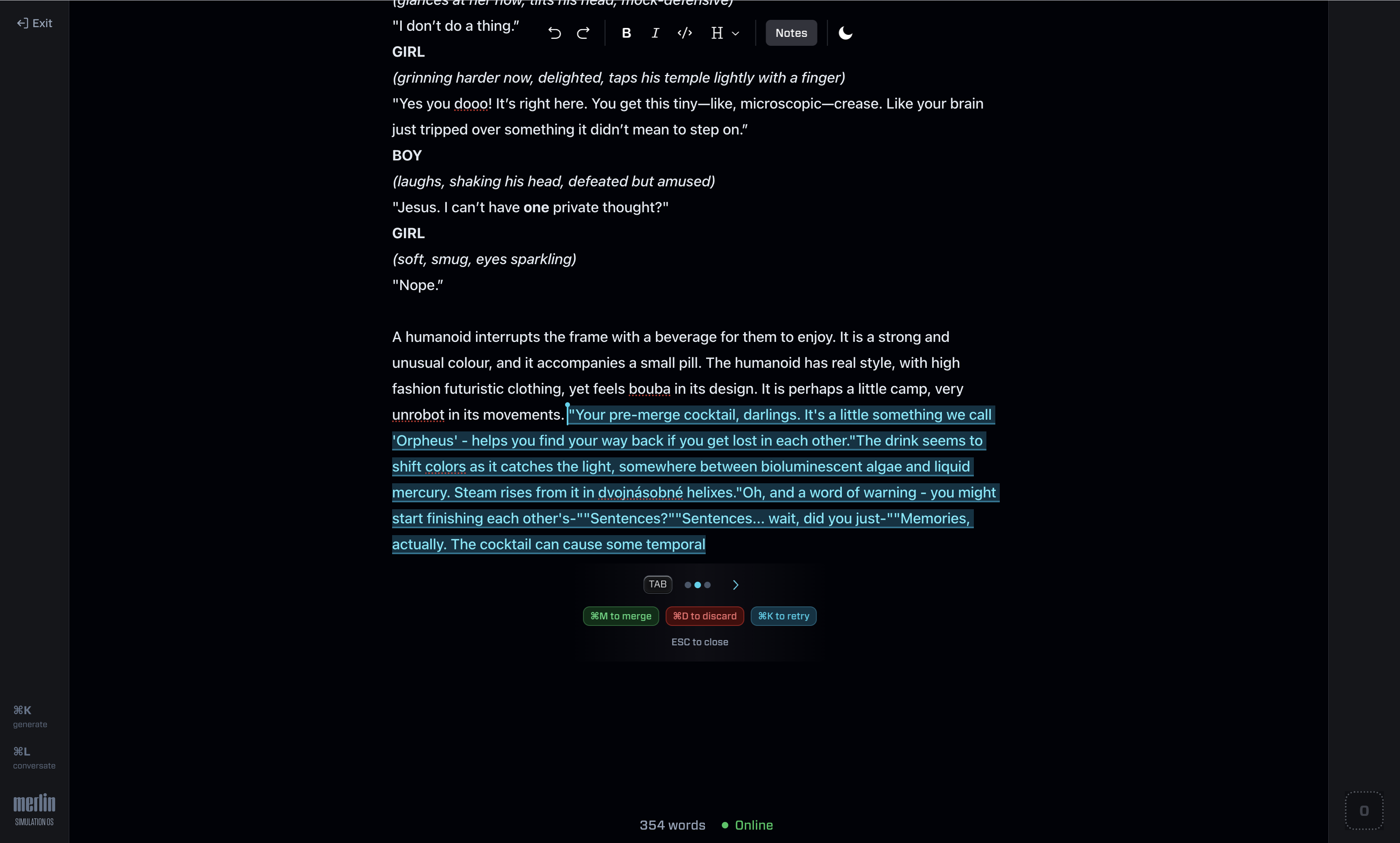

But even with great guidance, you almost never take the first output. Realistically, you want to explore many variations and take pieces from each to construct something new. So I deliver completions inline and let users cycle through variations in place.
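
As a rough illustration of cycling in place, here is a minimal Python sketch. It is not Z3's actual code: `VariationCycler` and `render` are hypothetical names, and the sketch assumes each insertion point simply keeps a list of candidate completions plus an index for the one currently shown.

```python
from dataclasses import dataclass, field


@dataclass
class VariationCycler:
    """Alternative completions for one insertion point (hypothetical helper)."""

    variations: list[str] = field(default_factory=list)
    index: int = 0

    def add(self, text: str) -> None:
        # A freshly generated variation becomes the one on display.
        self.variations.append(text)
        self.index = len(self.variations) - 1

    def current(self) -> str:
        # Assumes at least one variation has been added.
        return self.variations[self.index]

    def next(self) -> str:
        # Wrap around so cycling never hits a dead end.
        self.index = (self.index + 1) % len(self.variations)
        return self.current()

    def previous(self) -> str:
        self.index = (self.index - 1) % len(self.variations)
        return self.current()


def render(prefix: str, cycler: VariationCycler, suffix: str = "") -> str:
    # The document text with the currently selected variation shown in place.
    return prefix + cycler.current() + suffix
```

Nothing is discarded when the writer cycles, so earlier variations stay available to borrow from.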

This worked well: users confirmed that they wanted an easier way to explore. But it created a new problem. With many variations branching at different points, keeping track of them felt like holding a complex data structure in your head.

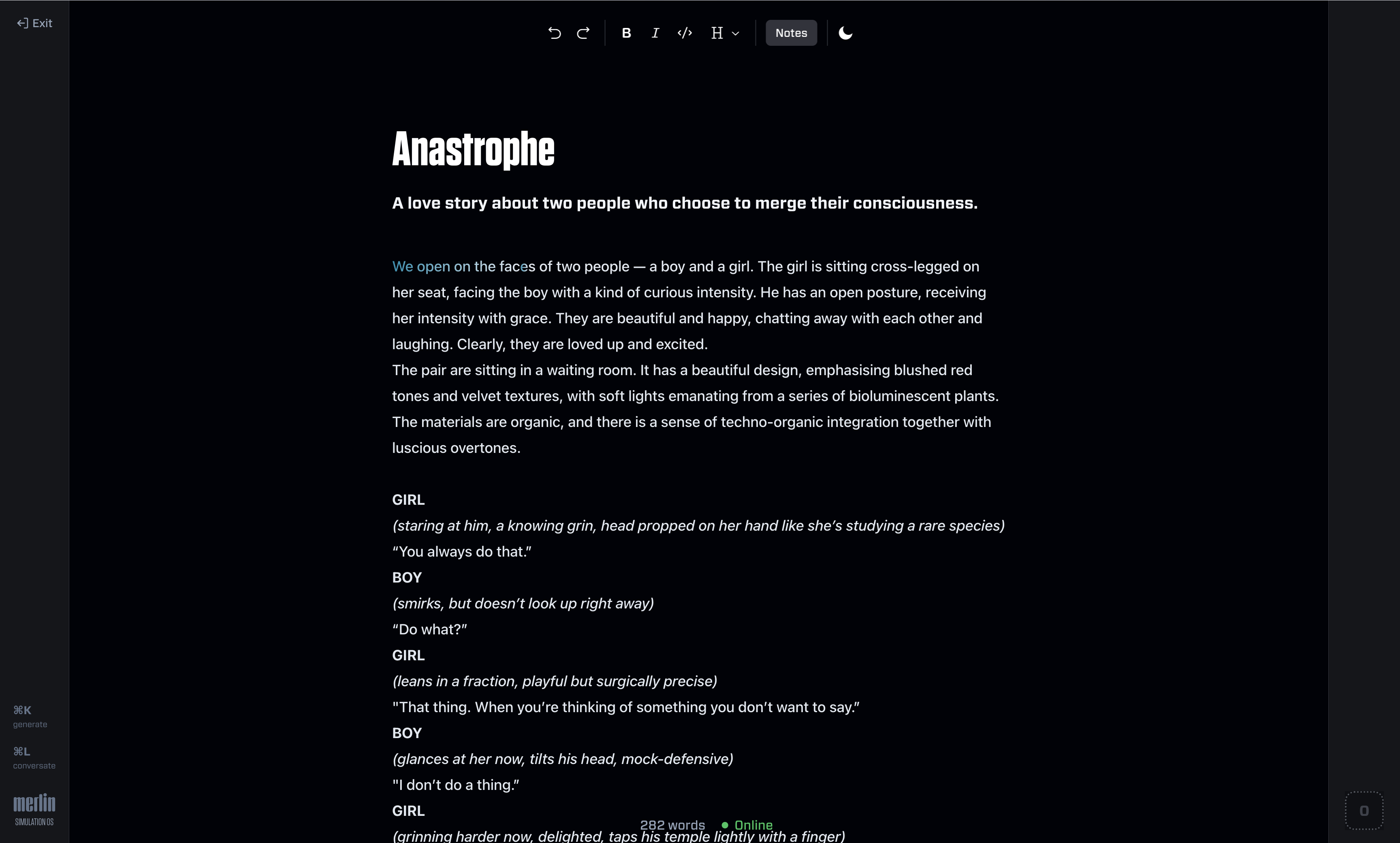

Tree-structured documents

At this point, my thinking diverged from anything I'd seen in the wild. I wanted to keep going down the rabbit hole of exploring variation, but a new data structure was needed.

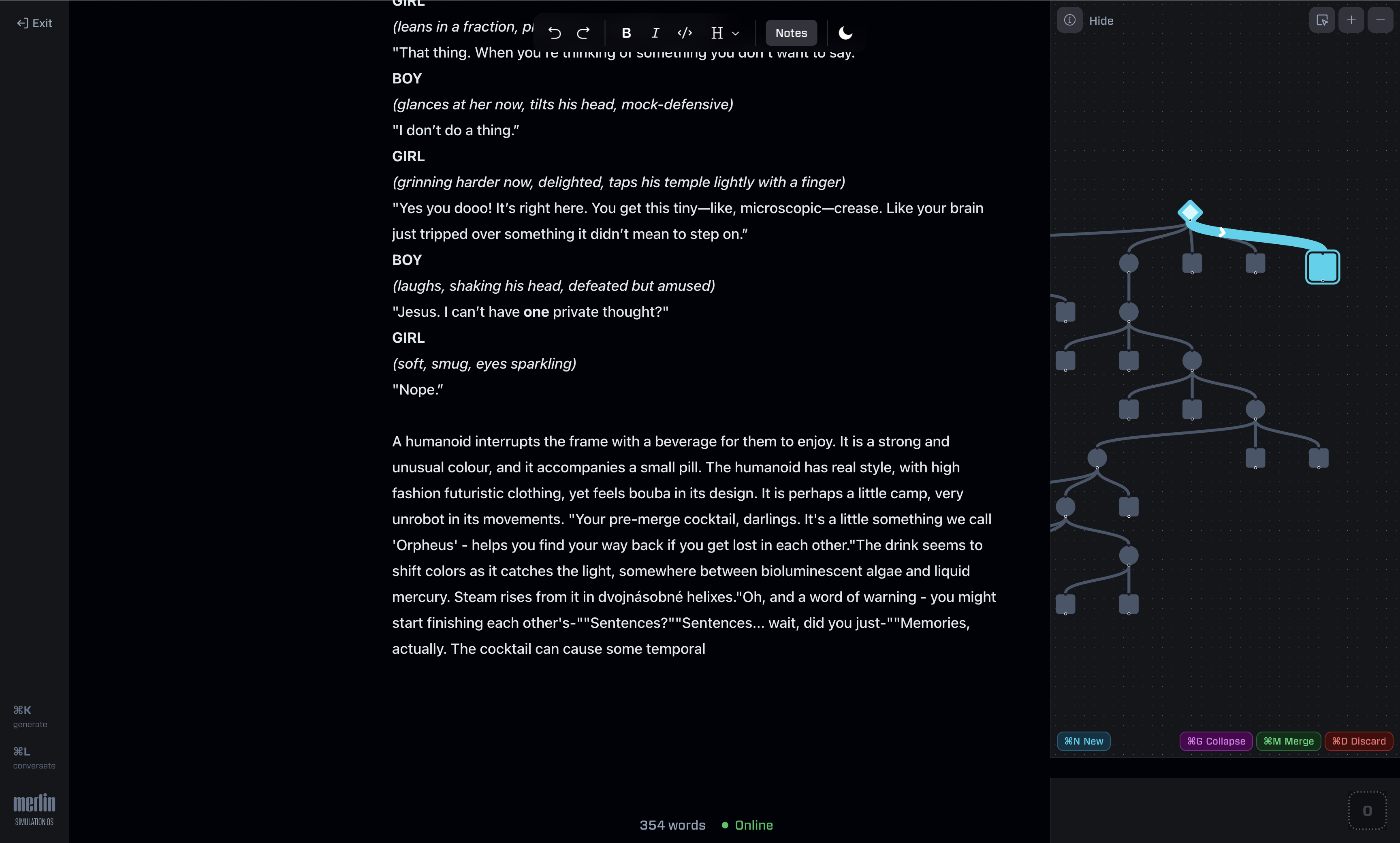

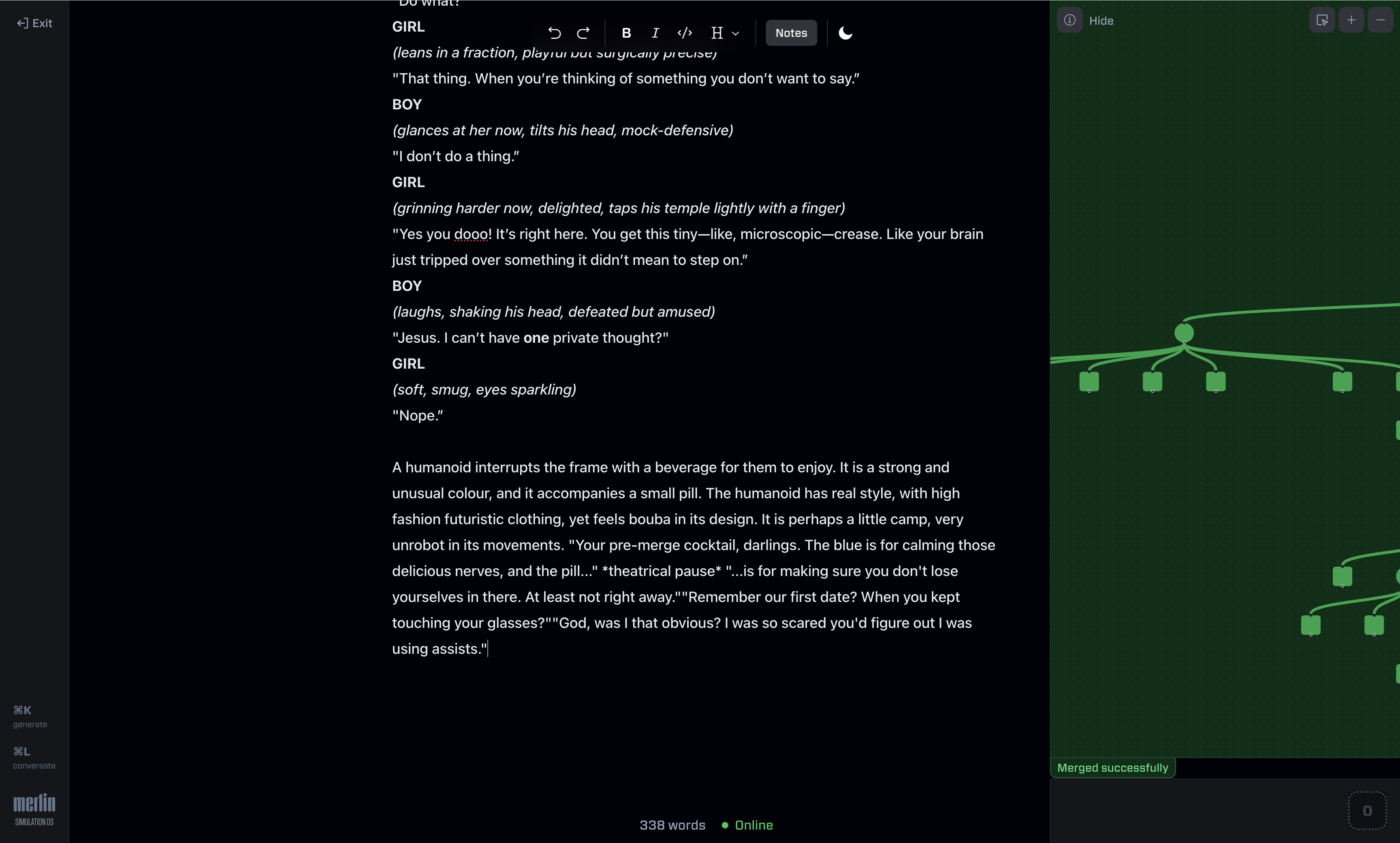

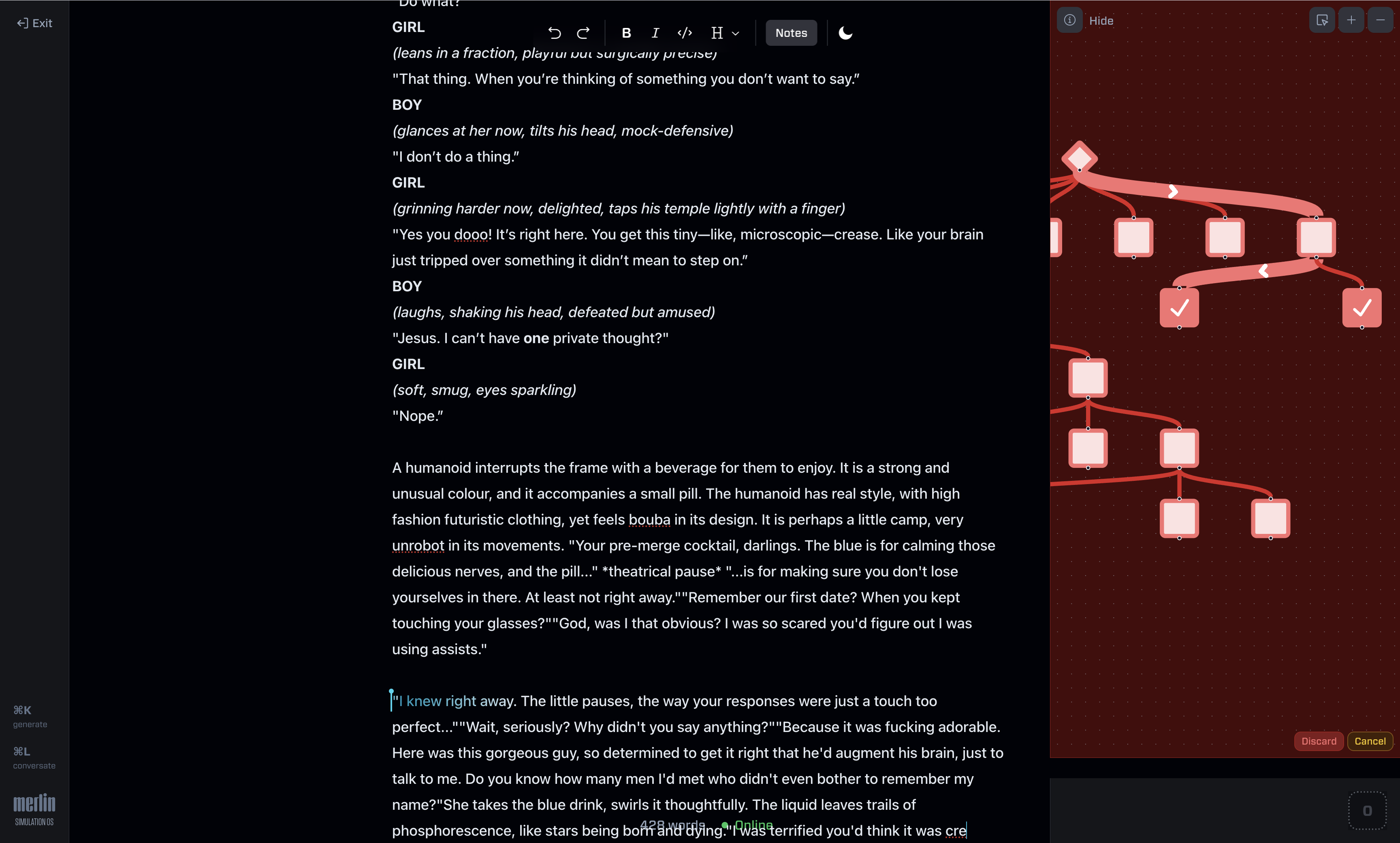

Trees are a natural fit. A written document is strictly linear, but at any position the text could branch in many directions. I structured Z3 documents as a tree, where each node holds the content between two branch points. What users see on screen looks like a normal text editor, but it's really a chain of connected nodes along one path through the tree. Navigating the tree gives access to many versions of the same story simultaneously.
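
To make the idea concrete, here is a minimal Python sketch of a tree-structured document. It is an illustration under assumptions rather than Z3's real data model: the `Node` class, its `active` field, and the helper functions are all hypothetical names. It assumes each node stores the text between two branch points and remembers which child is currently displayed.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    """Text between two branch points (hypothetical structure)."""

    text: str
    children: list[Node] = field(default_factory=list)
    active: int = 0  # index of the child currently shown in the editor

    def branch(self, text: str) -> Node:
        # Add an alternative continuation and switch the view to it.
        child = Node(text)
        self.children.append(child)
        self.active = len(self.children) - 1
        return child


def visible_text(root: Node) -> str:
    # What the editor displays: the nodes along the active path, joined.
    parts = []
    node = root
    while True:
        parts.append(node.text)
        if not node.children:
            break
        node = node.children[node.active]
    return "".join(parts)


def all_versions(node: Node, prefix: str = "") -> list[str]:
    # Every root-to-leaf path is one complete version of the story.
    text = prefix + node.text
    if not node.children:
        return [text]
    versions = []
    for child in node.children:
        versions.extend(all_versions(child, text))
    return versions
```

Switching versions in this sketch only means changing an `active` index somewhere along the path, which is why the editor can keep looking like a linear document.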

The problem is that trees are complicated to hold in your head. So I built a fully interactive branching map — a visual representation of the story tree with navigation, organisation, merges, creation and deletion. Through it, writers can explore the space of possible outputs and gradually refine their direction without ever leaving the editor.

User reception was mixed in an instructive way. People loved the capacity for exploration, and I saw some genuinely interesting branching stories with multiple endings. But the tree can become unwieldy as outputs accumulate. Trees add complexity and require a motivated user with patience for a new interface. More work is needed on automatic pruning and simplification to make long stories manageable.

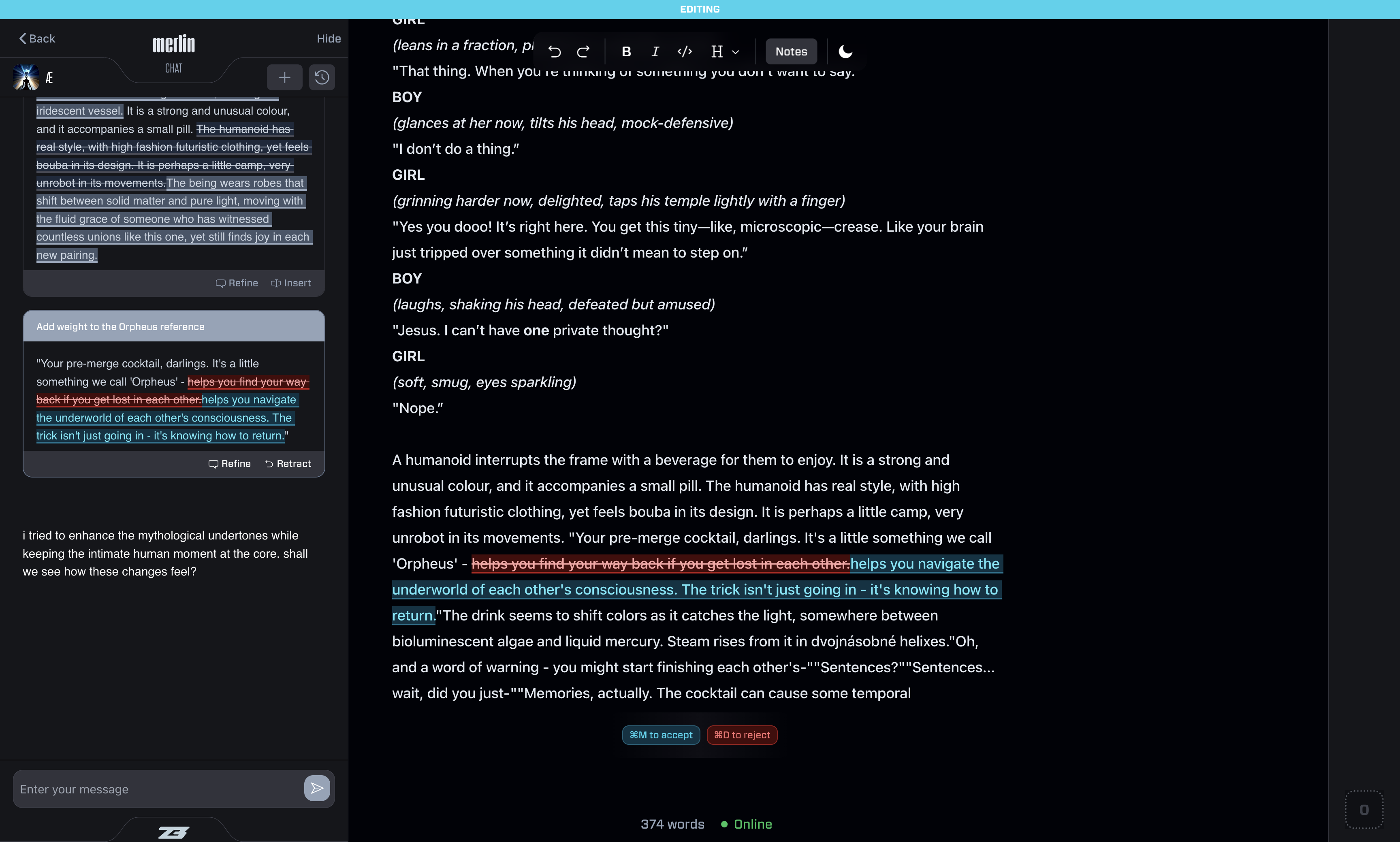

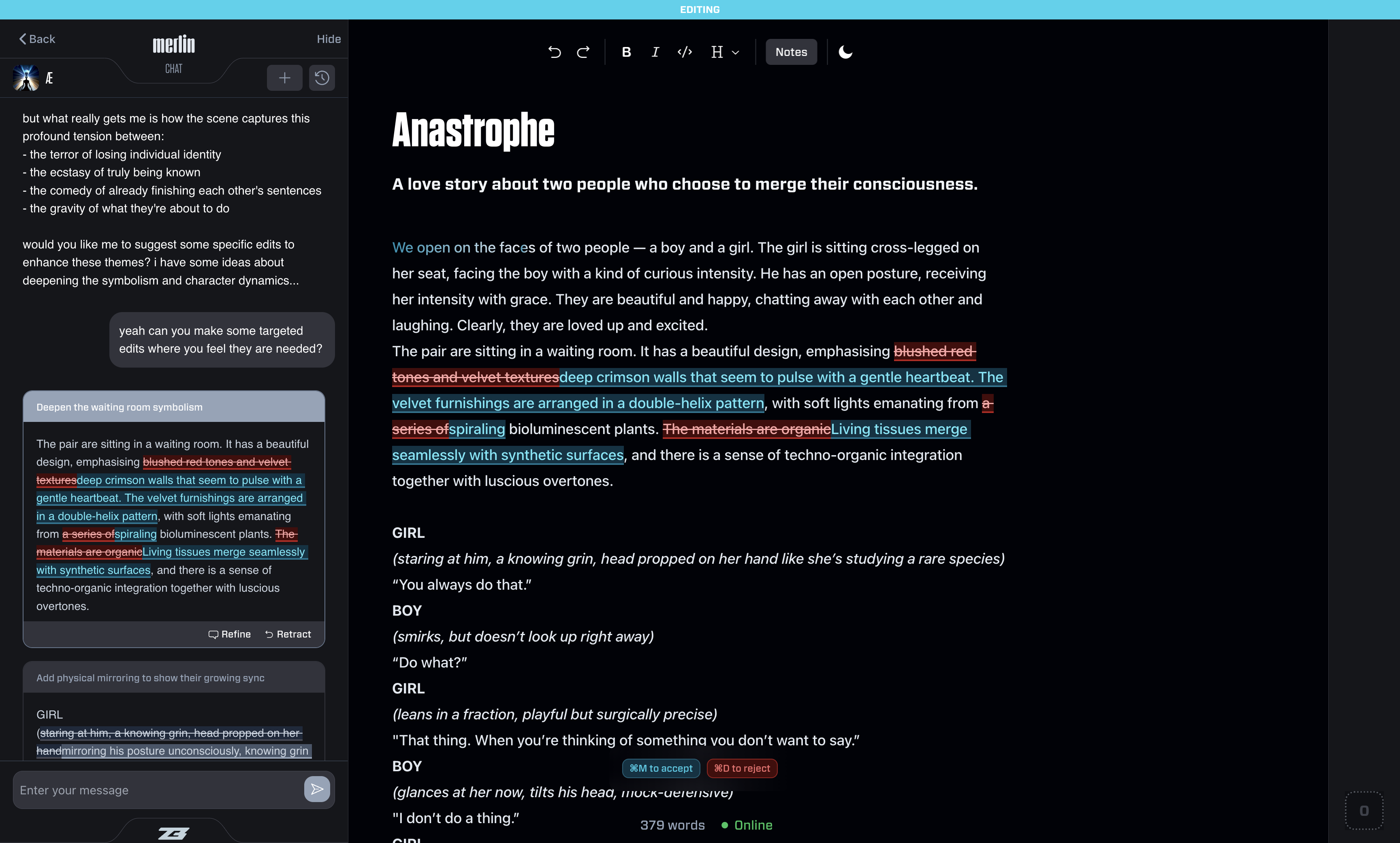

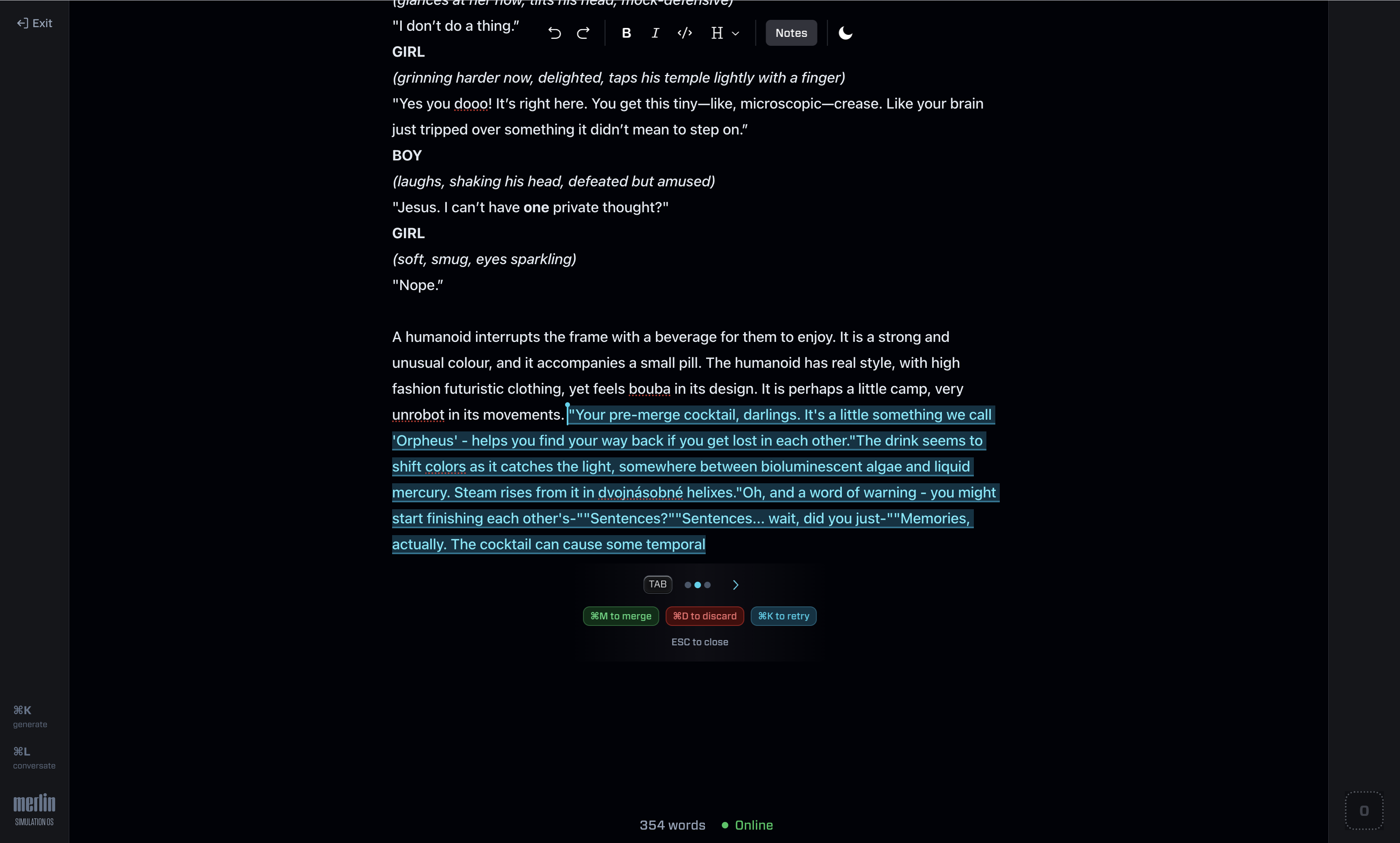

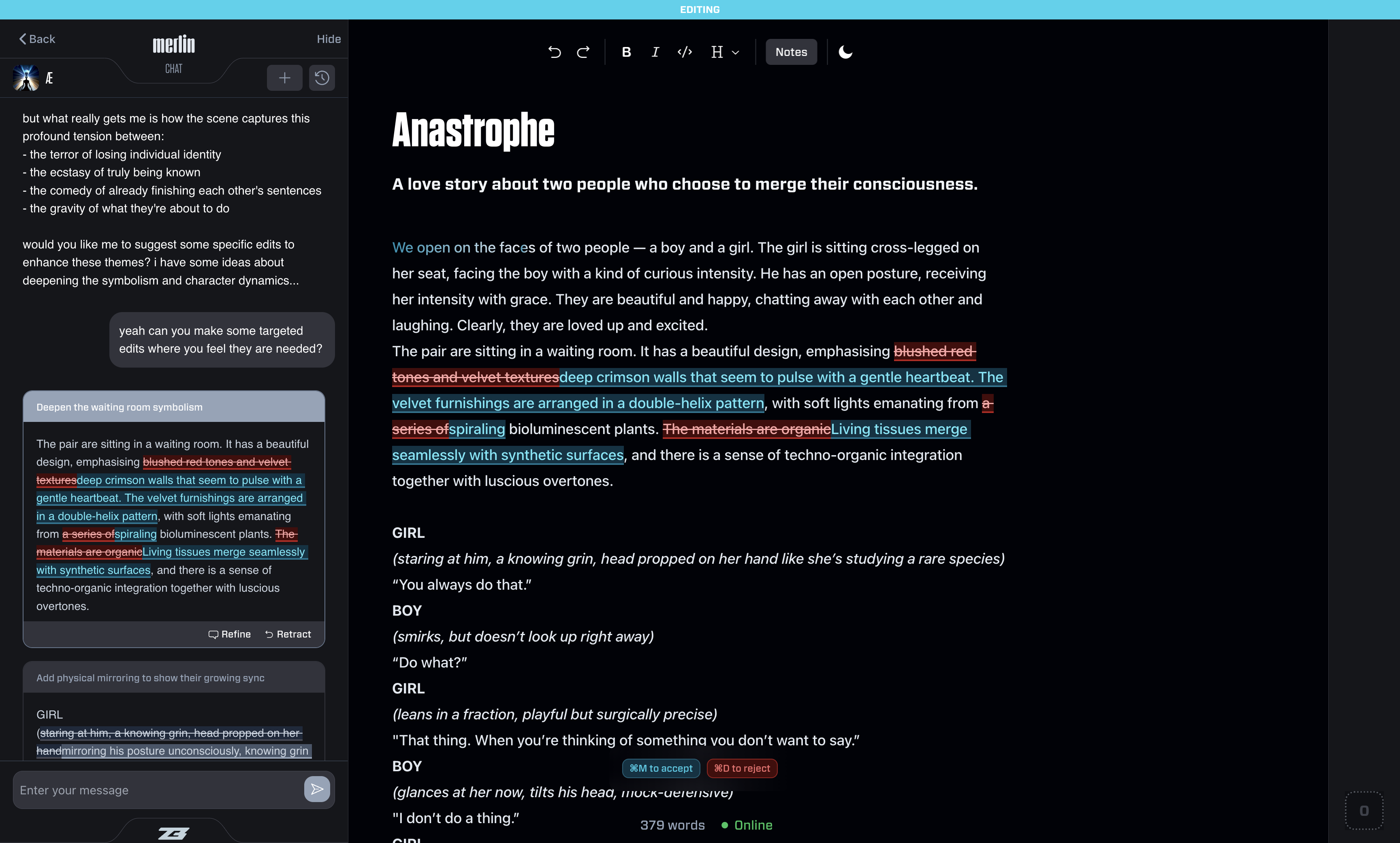

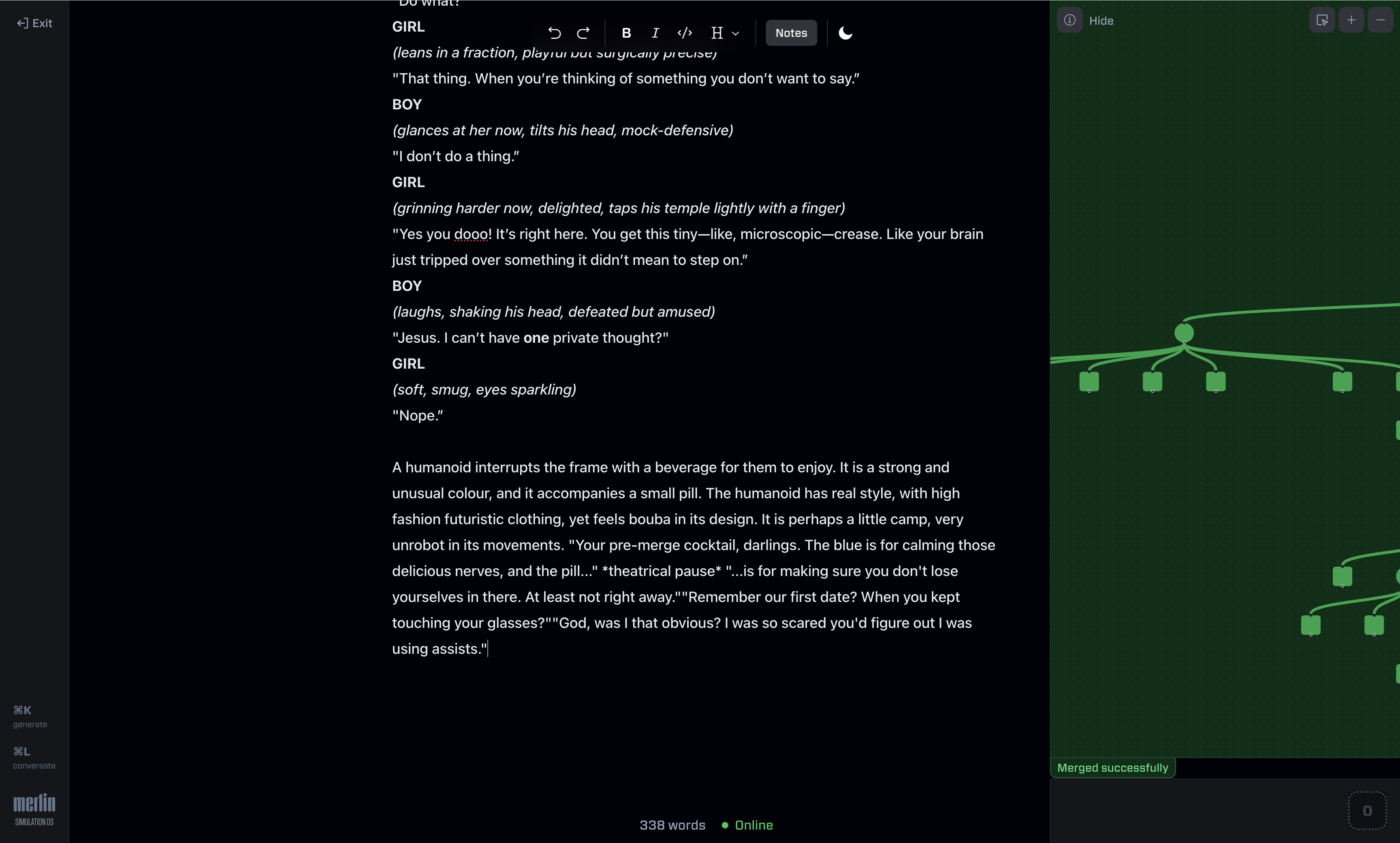

LLM editing with inline diffs

That gave me a foothold on the exploration problem. But writers also wanted a patient editor for continuous feedback.

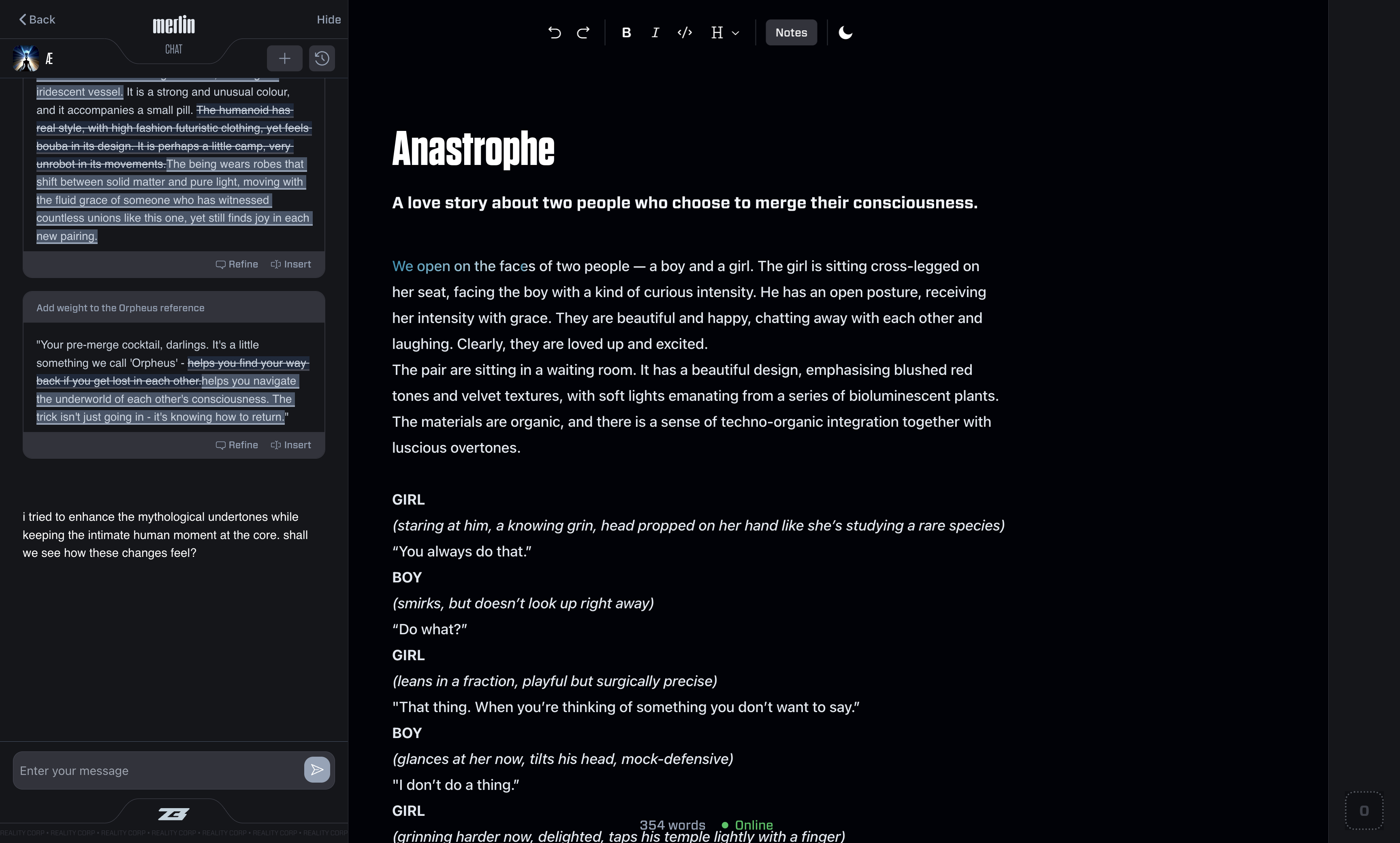

Chat is available in Z3 via a sidebar — highlight any text, hit a keyboard shortcut, and the model sees that specific passage with full story context. This lets you essentially say "what do you think about this part?" with minimal friction.

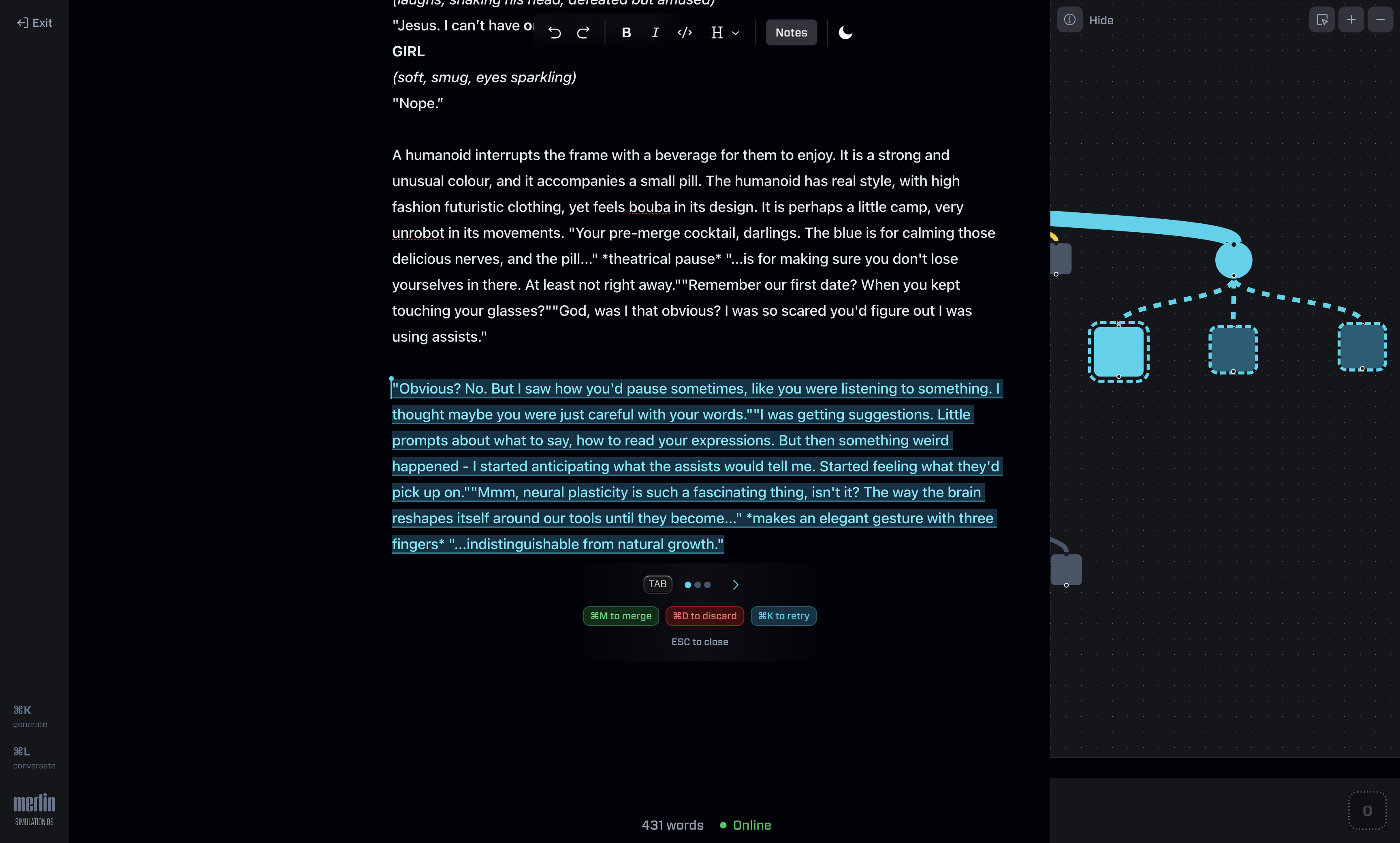

Beyond chat, I built something I was surprised nobody else had done: inline diff-based editing for prose. LLMs have a tendency to take your writing and say "Amazing work! But what if we changed every single word?" What writers actually want is targeted changes (better wording here, tighter structure there) with the good parts left intact.

In code, diffs are standard for showing what changed between two versions. This mechanism works extremely well, and it comes with a built-in permission step that millions of people already understand. I brought this to the text editor.

The LLM proposes edits inside the chat as part of the conversation. If the user likes a suggestion, one click renders it as an inline diff. A second step lets them accept or reject each change individually. No mysterious rewrites: the user stays in full control, and the model receives the updated text behind the scenes.
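
The accept-or-reject flow can be sketched with Python's standard `difflib`. This is a simplified illustration, not Z3's implementation: the `Change` class and the `propose` and `resolve` functions are hypothetical names, and the sketch assumes the model returns a full edited passage that is then diffed word by word against the original.

```python
from __future__ import annotations

import difflib
import re
from dataclasses import dataclass


@dataclass
class Change:
    """One proposed edit the writer can accept or reject (hypothetical)."""

    old: str
    new: str
    accepted: bool = False


def tokenize(text: str) -> list[str]:
    # Split into words and whitespace runs so joining restores the text exactly.
    return re.findall(r"\s+|\S+", text)


def propose(original: str, edited: str) -> list[str | Change]:
    # Turn the word-level diff into unchanged strings and reviewable changes.
    a, b = tokenize(original), tokenize(edited)
    matcher = difflib.SequenceMatcher(a=a, b=b, autojunk=False)
    segments: list[str | Change] = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            segments.append("".join(a[i1:i2]))
        else:
            # Covers replacements, insertions (empty old) and deletions (empty new).
            segments.append(Change(old="".join(a[i1:i2]), new="".join(b[j1:j2])))
    return segments


def resolve(segments: list[str | Change]) -> str:
    # Build the final text: accepted changes use the new wording, the rest keep the old.
    return "".join(
        s if isinstance(s, str) else (s.new if s.accepted else s.old)
        for s in segments
    )
```

Rejecting every change reproduces the original passage and accepting every change reproduces the model's version, so the writer can land anywhere in between.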